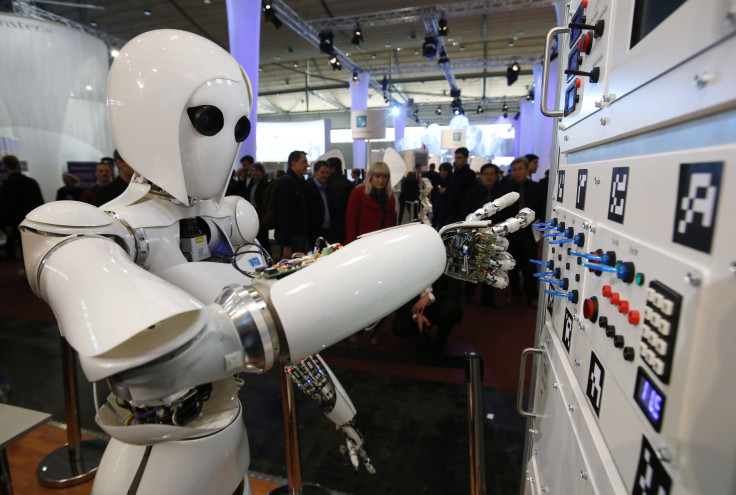

Artificial Intelligence: Google Outlines Five Key Safety Problems For Cleaning Robots Gone Rogue

Just a few weeks back, scientists at Google’s artificial intelligence division DeepMind announced they were developing a “kill switch” to ensure that intelligent machines do not go all Terminator on us. Now, it seems, Google’s AI-related concerns are a bit less dire and a bit more mundane.

Right now, Google is not worried that an AI will suddenly become sentient and begin its nefarious plans to take over the world (à la Skynet). It is, however, worried about whether the helpful house robot you bought will clean your house without, say, burning it down by sticking a wet mop in an electric socket.

To address these worries, or at least to put them out in the open, Google's computer scientists have now published a paper titled "Concrete Problems in AI Safety." The paper enumerates practical problems that any AI system might face while interacting with its environment, including the humans in it. The list is similar in spirit to Isaac Asimov's three laws of robotics, though far less prone to dystopian interpretations.

“Our goal in this document is to highlight a few concrete safety problems that are ready for experimentation today and relevant to the cutting edge of AI systems, as well as reviewing existing literature on these problems,” the researchers wrote in the paper. “We explain why we feel that recent directions in machine learning, such as the trend toward deep reinforcement learning and agents acting in broader environments, suggests an increasing relevance for research around accidents.”

The five problems, which Google's researchers explain using the example of a cleaning robot, are as follows:

- Avoiding Negative Side Effects: How do we make sure the cleaning robot does not “disturb the environment in negative ways” — such as knocking over a vase while cleaning just because it is faster to clean the rest of the floor that way?

- Avoiding Reward Hacking: How do we ensure that the robot does not "game" its reward function? For instance, it might cover up a mess it is supposed to clean with materials it cannot see through, rather than actually cleaning it.

- Scalable Oversight: How do we ensure that the robot has enough decision-making power that it does not have to ask its human owners every time it needs to move something? How do we program it to throw out trash (candy wrappers, paper cups) but not things that are actually valuable (cellphones, wallets)?

- Safe Exploration: How do we ensure the robot doesn’t fall off the roof or stick a wet mop in an electric socket in its enthusiasm to clean the house?

- Robustness to Distributional Shift: How do we make the robot adapt safely to changes in its environment? For instance, how do we program it to understand that cleaning a bedroom floor is different from cleaning a factory floor, the setting it was trained in?

“There are several trends which we believe point towards an increasing need to address these (and other) safety problems,” the researchers wrote in the paper. “'Side effects' are much more likely to occur in a complex environment, and an agent may need to be quite sophisticated to hack its reward function in a dangerous way. This may explain why these problems have received so little study in the past, while also suggesting their importance in the future.”

© Copyright IBTimes 2024. All rights reserved.