Eric Schmidt Or Elon Musk, Who Is Right About The Future Of Artificial Intelligence?

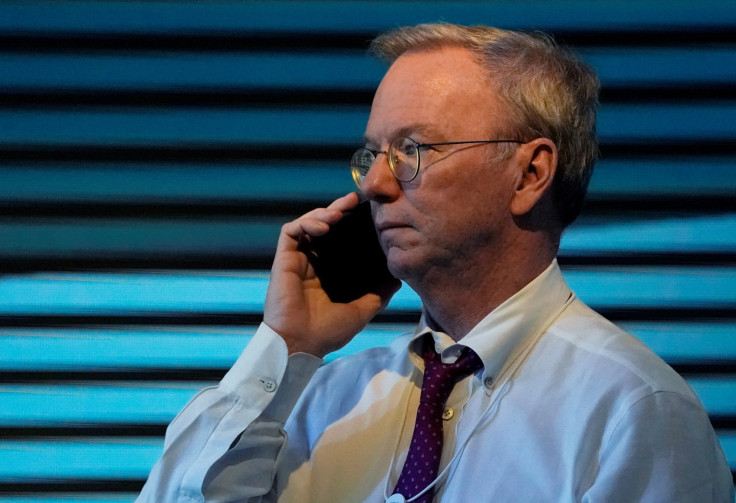

Eric Schmidt, the former chairman of Google’s parent company Alphabet and now its technical adviser, on Friday joined the list of people who oppose Tesla and SpaceX CEO Elon Musk’s views about the future of artificial intelligence. Musk has warned that AI, if unregulated, will eventually become an existential threat to humanity, and his opinion has both famous supporters, like the late Stephen Hawking, and dissenters, like Schmidt.

Speaking at the VivaTech conference in Paris on Friday, Schmidt was responding to a question about Musk’s dire warnings about AI.

“I think Elon is exactly wrong. … He doesn’t understand the benefits that this technology will provide to making every human being smarter. The fact of the matter is that AI and machine learning are so fundamentally good for humanity,” Schmidt said, adding he shared Musk’s concerns about the potential for misuse of technology.

“He is concerned about the possible misuse of this technology and I am too. … The example I would offer is, would you not invent the telephone because of the possible misuse of the telephone by evil people? No, you would build the telephone and you would try to find a way to police the misuse of the telephone,” he said.

In the past, Musk has called AI “our biggest existential threat” and likened AI research to “summoning the demon.” More recently, he said AI was a greater threat than North Korea and could even lead to World War III. At the same time, he has not called for the development of AI to be abandoned, only for it to be regulated before it gets out of hand.

Since Musk’s own businesses, especially Tesla, rely on AI, it would make sense for him to support AI’s development. He has said Tesla vehicles would be able to drive themselves fully, including taking owners to their destinations without even needing to be told where to go. Musk has also invested in Vicarious, a company working on a neural network-based computer system designed to think like a human.

Most big technology companies today, including Musk’s own as well as Google, Facebook and several others, are increasingly relying on AI for a variety of tasks. Facebook’s Mark Zuckerberg is another enthusiastic supporter of AI, having previously called Musk’s warnings “pretty irresponsible.”

So, which view is right? It is not possible to say just yet. The question concerns the future of AI, and since we don’t have time machines (yet), knowing the future is impossible. Even the idea of what AI actually means differs from person to person.

For instance, Rodney Brooks, who set up the Computer Science and Artificial Intelligence Lab at the Massachusetts Institute of Technology, told TechCrunch in an interview in July 2017 that most AI alarmists, including Musk and Hawking, don’t work in AI themselves. For those who work in the field of AI, the problems of creating something at a product level are only too obvious.

The consensus, which Musk shares, is that the point at which machines will be smart enough to outthink humans is a long way off (if it ever arrives). There is less agreement about whether machines, once they reach that point, will want to dominate (or even exterminate) humans, a staple scenario of many a dystopian science fiction story. What will actually happen? Only time will tell.

© Copyright IBTimes 2024. All rights reserved.