Google Pixel Camera Software Was Originally Made For Google Glass

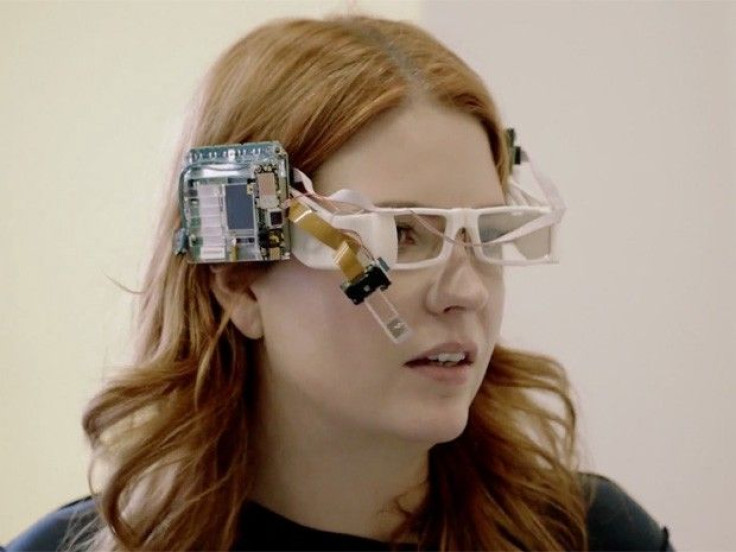

Google Pixel’s cameras were among the best smartphone cameras of 2016, receiving the highest score on DxOMark, which called them “the best smartphone cameras ever made.” On Friday, Google parent Alphabet's X division revealed that the device inherited its camera software from the now-shelved Google Glass project.

“Because Glass needed to be light and wearable, creating a bigger camera to solve these challenges wasn’t an option. So the team started to ask: what if we looked at this problem in an entirely new way? What if, instead of trying to solve it with better hardware, we could do it with smart software choices instead?” the division wrote in its blog post Friday, describing the development of the Google Glass camera.

The Google Pixel came with a 12-megapixel rear camera with a Sony IMX378 sensor and an 8-megapixel front camera with a Samsung S5K4H8/Omnivision OV8856 sensor. But the hardware alone does not deserve all the credit for the cameras’ performance. Google was able to get the most out of the Pixel's hardware by using specialized software called Gcam.

Development of Gcam began in 2011, when the team set out to build a camera that could fit into Google Glass, the company's AR-enabled eyewear with a camera attached. Glass was meant to let the wearer shoot photos from a first-person vantage point and share the experience easily, so it needed a purpose-built camera. The company could fit only a small camera on the device, and that camera had to get lighting, focus and image sensing right on very limited battery power, a challenge Google needed to overcome to make Glass work.

This led the company to computational photography techniques, which in turn helped it build software-based image capture and processing.

One technique the Gcam team explored was image fusion, which involves taking a rapid sequence of shots and combining them into a single, higher-quality image. This technique helped the Google device take more detailed shots, even in low light. The same technology was later brought to the Nexus 5 and Nexus 6 Android camera apps and finally rolled out to the Google Pixel smartphone.
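To give a rough sense of why fusing a burst of shots helps in low light, here is a minimal Python sketch. It is not Gcam's actual pipeline, which the blog post does not detail. It assumes the frames are already perfectly aligned and simply averages them pixel by pixel; the function names, the synthetic scene and the noise level are all made up for illustration. A real burst pipeline would also have to align frames and reject moving objects.

```python
import random


def fuse_frames(frames):
    """Average a burst of equally sized grayscale frames, pixel by pixel.

    Assumes the frames are already aligned (an illustrative simplification).
    """
    if not frames:
        raise ValueError("need at least one frame")
    height, width = len(frames[0]), len(frames[0][0])
    fused = [[0.0] * width for _ in range(height)]
    for frame in frames:
        for y in range(height):
            for x in range(width):
                fused[y][x] += frame[y][x]
    count = len(frames)
    return [[value / count for value in row] for row in fused]


def noisy_capture(scene, sigma, rng):
    """Simulate one low-light shot: the true scene plus random sensor noise."""
    return [[pixel + rng.gauss(0.0, sigma) for pixel in row] for row in scene]


def rms_error(image, reference):
    """Root-mean-square difference between an image and the true scene."""
    squared = [
        (a - b) ** 2
        for row_a, row_b in zip(image, reference)
        for a, b in zip(row_a, row_b)
    ]
    return (sum(squared) / len(squared)) ** 0.5


# Hypothetical 32x32 test scene and an 8-frame burst (values are assumptions).
rng = random.Random(0)
scene = [[float((x + y) % 256) for x in range(32)] for y in range(32)]
burst = [noisy_capture(scene, sigma=20.0, rng=rng) for _ in range(8)]

single_error = rms_error(burst[0], scene)
fused_error = rms_error(fuse_frames(burst), scene)
print(f"single-frame error: {single_error:.2f}")
print(f"fused-burst error:  {fused_error:.2f}")
```

Because the noise in each shot is independent, averaging eight frames should cut the error well below that of any single frame, which is the basic reason a small sensor can produce cleaner images from a burst than from one exposure.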

Next, the Gcam team is exploring machine learning and how it could work in conjunction with computational photography.

“One direction that we’re pushing is machine learning. There’s lots of possibilities for creative things that actually change the look and feel of what you’re looking at. That could mean simple things like creating a training set to come up with a better white balance. Or what’s the right thing we could do with the background — should we blur it out, should we darken it, lighten it, stylize it? We’re at the best place in the world in terms of machine learning, so it’s a real opportunity to merge the creative world with the world of computational photography,” Marc Levoy, principal engineer at Google, said in the company's blog post Friday.

© Copyright IBTimes 2024. All rights reserved.