Human Echolocation Can Help Visually Challenged People See Using Sound, Study Finds

Ever wondered how bats navigate the dark depths of a cave without bumping into the walls? They use a technique called echolocation. By listening to the echoes of the sounds they produce, bats can ‘gauge,’ or ‘see,’ their surroundings. Can humans do the same? Have you ever tried to cross a room with your eyes closed, using only tongue clicks?

Scientists are exploring human echolocation as a way to help visually challenged people. A study published Aug. 31 in the journal PLOS Computational Biology opens up new avenues for them. It was conducted by Lore Thaler of Durham University in the United Kingdom, Galen Reich and Michael Antoniou of the University of Birmingham, U.K., and colleagues.

The study examined three blind adults who were expert echolocators and had been using the technique since the age of 15 or younger. They relied on it for activities such as hiking, visiting new places and riding bicycles. The findings offer new insight into how the technique works.

Previous research on human echolocation has not offered clear results. Although the technique was widely used, how it actually works had received little attention. In the new study, however, the researchers describe in detail the mouth clicks each of the three participants used during echolocation. In an acoustically controlled room, they recorded and analyzed the acoustic properties of several thousand clicks, including the spatial paths the sound waves took.

The data shed light on how the technique works and suggest how it could be made more efficient. The researchers hope the findings can help develop human echolocation as an alternative to eye transplants in the future.

The study found that the subjects' clicks were more directional than ordinary human speech. Whereas speech is shaped for enunciation and emotion, the clicks are short pulses of about three milliseconds that reflect back in such a way that small changes in the echo can be detected and perceived. The clicks had most of their energy between 2 and 4 kilohertz, with some additional strength around 10 kilohertz. By comparison, the low-frequency sounds of human speech lie around 100 to 250 hertz.
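Some illustrative arithmetic, not taken from the study, shows why a pulse this short is useful. Assuming sound travels at about 343 m/s in room-temperature air (the symbol $c$), and taking a hypothetical object at distance $d = 1$ m and a click duration $\tau = 3$ ms, the echo delay and the length of air the click occupies are:

$$
t_{\text{echo}} = \frac{2d}{c} = \frac{2 \times 1\,\text{m}}{343\,\text{m/s}} \approx 5.8\,\text{ms},
\qquad
\ell_{\text{click}} = c\,\tau = 343\,\text{m/s} \times 3\,\text{ms} \approx 1.03\,\text{m}
$$

So for an object 1 m away, the click has already ended before its echo returns. Echoes only begin to overlap the outgoing click for objects closer than about $c\,\tau / 2 \approx 0.51$ m.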

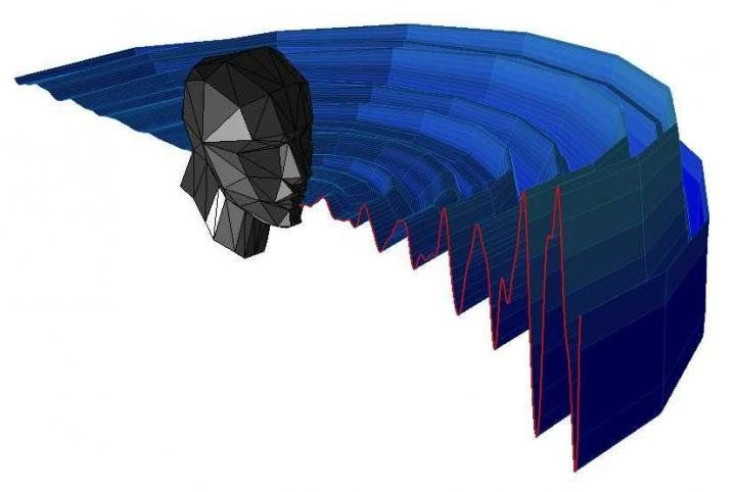

The research characterizes the clicks in fine detail. A mathematical formula derived from the study can be used to synthesize mouth clicks by machine, both to study them further and as prototypes for new kinds of visual aids. The researchers plan to use this formula to build a virtual mouth-click generator, advancing echolocation research, helping integrate the ability into everyday life and, hopefully, making it better, or at least cooler.
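To give a feel for what machine-synthesized clicks might look like, here is a minimal sketch. It is *not* the formula published in the study. It assumes a simple hypothetical model: a sum of sine tones sharing one exponentially decaying envelope, with a main tone at 3 kHz (inside the 2 to 4 kHz band reported above) and a weaker tone at 10 kHz, lasting 3 ms. The sample rate, decay constant and amplitudes are illustrative guesses, and the helper names `synth_click`, `magnitude_at` and `peak_frequency` are invented for this example.

```python
import math

SAMPLE_RATE = 96_000  # Hz; assumed rate, comfortably above twice 10 kHz


def synth_click(duration_s=0.003,
                components=((3_000.0, 1.0), (10_000.0, 0.3)),
                decay_s=0.0005,
                sample_rate=SAMPLE_RATE):
    """Hypothetical click model: sine tones (frequency in Hz, relative
    amplitude) under a shared exponentially decaying envelope starting at t = 0.
    Values are illustrative, not fitted parameters from the study."""
    n = int(round(duration_s * sample_rate))
    samples = []
    for i in range(n):
        t = i / sample_rate
        envelope = math.exp(-t / decay_s)
        tone = sum(a * math.sin(2 * math.pi * f * t) for f, a in components)
        samples.append(envelope * tone)
    return samples


def magnitude_at(samples, freq_hz, sample_rate=SAMPLE_RATE):
    """Magnitude of a single discrete Fourier transform term at freq_hz."""
    re = im = 0.0
    for i, x in enumerate(samples):
        phase = 2 * math.pi * freq_hz * i / sample_rate
        re += x * math.cos(phase)
        im -= x * math.sin(phase)
    return math.hypot(re, im)


def peak_frequency(samples, candidates, sample_rate=SAMPLE_RATE):
    """Return the candidate frequency with the largest spectral magnitude."""
    return max(candidates, key=lambda f: magnitude_at(samples, f, sample_rate))


click = synth_click()
print(len(click))  # 288 samples, i.e. 3 ms at 96 kHz
print(peak_frequency(click, range(500, 20_001, 500)))  # 3000
```

Scanning the synthetic click's spectrum recovers its dominant tone near 3 kHz, with a smaller bump near 10 kHz. A real generator would replace these guessed parameters with values measured from recorded clicks.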

"The results allow us to create virtual human echo locators," Thaler said. "This allows us to embark on an exciting new journey in human echolocation research."

The technology could have many applications. It could be built into military gear, for example a headpiece with a transmitter and receiver that generates images of dark places, much like infrared imaging. Human echolocation could also offer a cheaper, less invasive alternative to eye surgery by becoming a viable substitute for vision. It could also improve imaging in submarines and deep-sea exploration vessels, and aid surveillance and scanning equipment.

© Copyright IBTimes 2024. All rights reserved.