Scrutinizing The Scientific Method: Researchers In Massive Open Access Study Fail To Replicate A Majority Of Psychology Studies

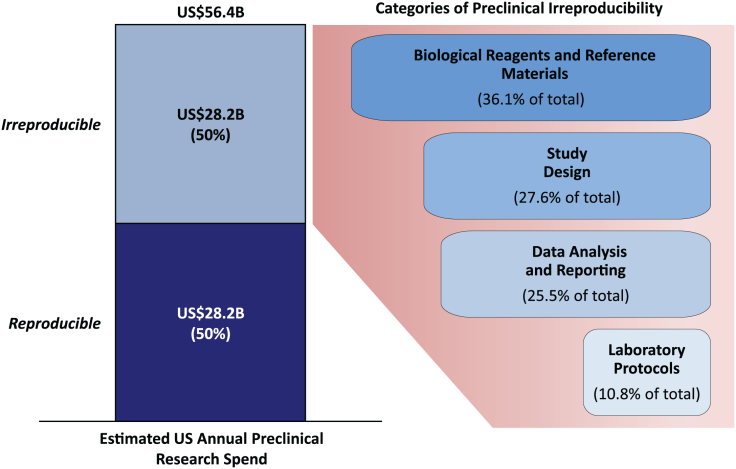

In textbooks, the process of scientific inquiry appears quite elegant: scientists conduct experiments and build upon one another’s results in a slow but steady march toward discovery. But in reality, researchers often can’t reproduce each other’s findings. One recent estimate suggests that the United States loses $28 billion a year, mostly in government funding, due to the inefficiency of researchers who fail to recreate one another’s results.

Now, scientists have some of the clearest evidence of the problem to date. Brian Nosek, a psychology professor at the University of Virginia who directs the Center for Open Science, enlisted 270 researchers in an attempt to replicate the findings of 100 papers published in three leading psychology journals since 2008. In the end, the team was able to reproduce the results of only 39 percent of the studies.

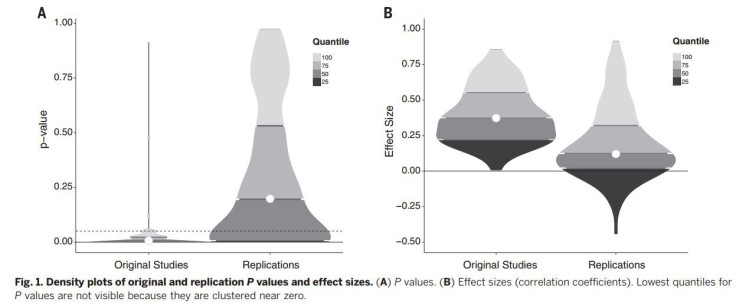

Even among studies successfully replicated, the effect measured by the reproductions was just half as strong on average as in the original studies. Those striking results were published in the journal Science on Thursday. In a departure from previous experiments, Nosek’s group also named, on a public website, the original authors whose work they failed to replicate.

“It's probably always been the case that much of the research that's published is not replicable, but that's never been quantified before,” says Howard Kurtzman, acting executive director of science for the American Psychological Association, which publishes two of the three journals evaluated in the project. “Now we have some real progress on that. It shows that scientists can study the scientific method itself, which I think is pretty fascinating.”

Though previous studies have examined the challenge of reproducing scientific results in other fields such as biomedical research, this project gave psychologists a first look at how well findings in their own discipline hold up to replication.

The 'Pottery Barn Rule'

The scientific community has historically placed little emphasis on replication efforts. Editors at scientific journals prefer to publish new findings rather than grant valuable page space to the drudgery of verification. With global scientific output doubling every nine years, scientists who want to publish in the top journals face greater competitive pressure to prioritize experiments with the potential for groundbreaking discoveries. At the same time, the number of online journals willing to publish research has exploded, providing ample opportunity for subpar studies to earn publication.

C. Glenn Begley, chief scientific officer for TetraLogic Pharmaceuticals, was one of the first to systematically describe the replication problem in a 2012 Nature comment. He thinks journals should ask researchers to complete or fund replications of their own work. “Many times, you read about an experiment that was only done once,” Begley says. “That's unacceptable.”

Lately, there has been some progress on this front. Some journals have revised their publication policies or dedicated entire sections to replication attempts. Researchers can use a new tool called registered reports to submit the design for a study or replication ahead of time. If an editor accepts the report, the researcher is guaranteed publication in journals such as Perspectives on Psychological Science -- no matter the results. One company even offers to film scientists in their labs so that others can see precisely how an experiment is done.

Rolf Zwaan, a psychology professor at Erasmus University in Rotterdam, Netherlands, who served as a reviewer to Science for the new study, says journals should take on an even larger role. He believes the “Pottery Barn” rule -- if you break it, you buy it -- should apply to scientific literature. In other words, journals that publish studies should pledge to run refutations of that work.

Another suggested improvement is to tweak the statistical criteria that journals use to evaluate scientists’ claims. Scientists use a measure known as the “p value” to gauge whether their results could be due to random chance. A p value of 0.05 means that, if there were no real effect, results at least as strong as those observed would turn up by chance about 5 percent of the time. Typically, that value or lower is required to achieve publication in a major research journal.
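
For readers who want to see the idea in action, here is a minimal sketch of one way to estimate a p value, using a permutation test. This is an illustration only, not the method used in Nosek’s project; the function name `permutation_p_value` and the scores are invented for the example. The code shuffles the group labels many times and counts how often chance alone produces a gap between the groups at least as large as the one observed.

```python
import random


def permutation_p_value(group_a, group_b, n_shuffles=10_000, seed=0):
    """Estimate a two-sided p value for a difference in group means.

    The estimate is the share of random relabelings whose mean difference
    is at least as large as the observed one, i.e. how often a gap this
    big shows up when the labels carry no information.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    n_a = len(group_a)
    n_b = len(group_b)
    observed = abs(sum(group_a) / n_a - sum(group_b) / n_b)

    pooled = list(group_a) + list(group_b)
    at_least_as_extreme = 0
    for _ in range(n_shuffles):
        rng.shuffle(pooled)
        diff = abs(sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b)
        # Small tolerance guards against floating-point rounding.
        if diff >= observed - 1e-12:
            at_least_as_extreme += 1
    return at_least_as_extreme / n_shuffles


# Hypothetical scores, invented purely for illustration.
treatment = [12, 15, 14, 16, 13, 17, 15, 14]
control = [11, 12, 13, 12, 10, 13, 12, 11]

p = permutation_p_value(treatment, control)
print(f"estimated p value: {p:.4f}")
print("meets the 0.05 threshold" if p <= 0.05 else "misses the 0.05 threshold")
```

A small p value here says only that a gap this large would rarely arise from shuffling alone. It does not say how likely the finding is to be true.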

While 97 percent of the studies evaluated in Nosek’s project had a p value of 0.05 or less when they were originally published, only 36 percent of the studies earned that mark when retested by the replication teams. Original studies with a p value of 0.01 or less, however, were much more likely to be successfully replicated. If journal editors lowered the maximum p value acceptable for publication, they would cut out a fair chunk of these nonreplicable results. They would also risk losing studies that show weak but intriguing results.
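
The trade-off can be sketched with a toy simulation. Everything in it is an assumption made for illustration, not data from the replication project: 20 percent of tested ideas are real, real effects produce a test statistic centered on 2.5, and a replication counts as a success if it points the same way and reaches p of 0.05 or less. The function name `simulate` is likewise hypothetical.

```python
import random
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()


def two_sided_p(z):
    """Two-sided p value for a z statistic under the no-effect assumption."""
    return 2 * (1 - STANDARD_NORMAL.cdf(abs(z)))


def simulate(threshold, n_studies=20_000, share_real=0.2, expected_z=2.5, seed=1):
    """Publish studies with p <= threshold, then rerun each published study once.

    Returns (number published, share of published studies that replicate,
    number of real effects that went unpublished). All parameters are
    hypothetical choices for this sketch.
    """
    rng = random.Random(seed)  # same seed means the same studies at every threshold
    published = replicated = real_effects_missed = 0
    for _ in range(n_studies):
        is_real = rng.random() < share_real
        center = expected_z if is_real else 0.0
        original_z = rng.gauss(center, 1.0)
        replication_z = rng.gauss(center, 1.0)

        if two_sided_p(original_z) > threshold:
            real_effects_missed += is_real  # True counts as 1
            continue

        published += 1
        same_direction = original_z * replication_z > 0
        if same_direction and two_sided_p(replication_z) <= 0.05:
            replicated += 1
    return published, replicated / published, real_effects_missed


for threshold in (0.05, 0.01):
    published, rate, missed = simulate(threshold)
    print(f"p <= {threshold}: {published} published, "
          f"{rate:.0%} replicated, {missed} real effects unpublished")
```

Under these made-up assumptions, the stricter cutoff publishes fewer studies and a larger share of them replicate, but more real effects never make it into print.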

“I think that's the big question for the field and for scientists in all areas -- what to make of this?” Kurtzman of the American Psychological Association says. “We may need to adjust some of our statistical criteria.”

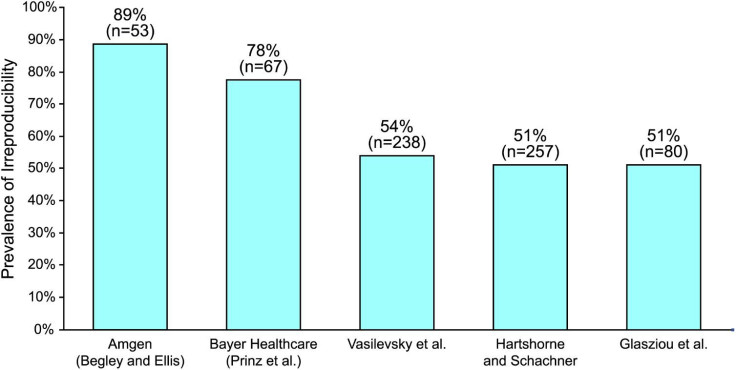

Similar studies have estimated that between 51 percent and 89 percent of biomedical research could not be reproduced in a test. Growing awareness of these issues has recently inspired some scientists to take matters into their own hands. Some now share data on original studies and replication attempts through the Center for Open Science or free online portals such as PsychFileDrawer.

Zwaan says still more scientists should consider replications a part of their daily duties, much in the way they volunteer to review articles for major journals. He has new students complete these attempts to learn their way around the lab. “I feel like replication isn't very creative but it's a necessary aspect of doing science,” he says. “We want people to contribute new ideas and we want people to assess the strength of our foundations.”

A Grain Of Salt

Begley of TetraLogic Pharmaceuticals says the goal isn’t perfection -- science is a messy human endeavor, after all -- but that the number of replications that confirm the results of original studies should far outpace those that find fault, if scientists are doing their jobs correctly.

“I never expect that you’ll ever get precisely the same answer in any biological system when you do the same experiment again,” Begley says. “I do expect things to point in the same general direction. I do expect the title of a paper to be correct.”

Nosek warns that the public should not take the results of the replication project as a mark against science. In some cases, the core concept may simply be more complex than researchers originally thought. Or the replication teams may have missed a small detail in the methods that affected the results. However, the risk of such a misunderstanding was minimized by the fact that replication teams worked with the original authors and independent reviewers to reconstruct the experiments.

Nosek also points out that the inability to replicate findings does not automatically reverse the many theories contained within the original studies. In most cases, new concepts are not established in a single scientific study, anyway. Key findings are buttressed by studies that examine a concept from a variety of angles. Even though the media often homes in on the results of a single study, that’s not the way science works.

“The reality is that each of those studies themselves is not definitive -- it's a piece of evidence that in accumulation will lead us to an answer,” he says. “We would love to have that answer be definitive and clear for each study, but that almost never occurs.”

© Copyright IBTimes 2025. All rights reserved.