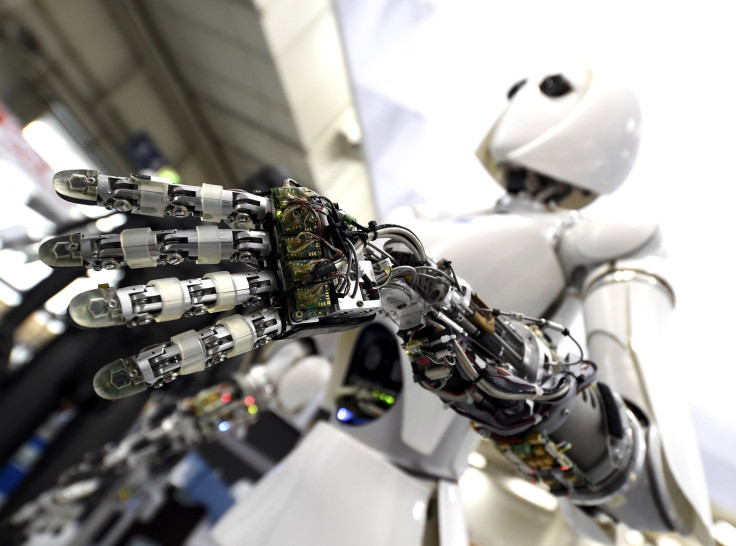

Touchy-Feely Robots: MIT’s New AI System Can Identify Things Using Sight, Touch

Learning about the environment using touch and sight comes easily to humans, but robots can’t use these senses interchangeably like we do. Researchers are now trying to teach robots how to identify objects using both senses.

In our everyday lives, we don’t think twice about lifting a coffee mug or folding a blanket. This sort of knowledge comes with years of trial and error in childhood. Researchers at the Massachusetts Institute of Technology are working on an AI system that can “learn to see by touching and feel by seeing.”

For the research, the team at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) used a KUKA robot arm fitted with a special tactile sensor called GelSight. KUKA robot arms are designed for industrial use, and GelSight technology helps robots gauge an object’s hardness. The idea was to enable the system to understand the relationship between touch and sight.

The team used a web camera to record 12,000 videos of 200 different objects being touched. The videos were broken down into still images, which the system then used to connect touch and visual data. The resulting dataset, called “VisGel,” contains three million “visual/tactile-paired images.” The team also used generative adversarial networks (GANs) to teach the system the connection between vision and touch.

“By looking at the scene, our model can imagine the feeling of touching a flat surface or a sharp edge,” says Yunzhu Li, CSAIL PhD student and lead author on a new paper about the system, according to a news release. “By blindly touching around, our model can predict the interaction with the environment purely from tactile feelings. Bringing these two senses together could empower the robot and reduce the data we might need for tasks involving manipulating and grasping objects.”

For now, the system works only in a controlled environment, and the team hopes to extend it to other settings in the future. This research could help robots figure out how much grip strength is needed to pick up or hold a particular object, helping them adapt to the world around them.

The paper will be presented at the Conference on Computer Vision and Pattern Recognition (CVPR) in Long Beach, California.

Research teams around the world are trying to build robots that can perform multiple tasks. Recently, other researchers at MIT developed a robot that can play Jenga, a game that requires both vision and touch.

© Copyright IBTimes 2025. All rights reserved.