Meta's Policy Criticized After Oversight Board's Review Of Biden Video

KEY POINTS

- Meta's Oversight Board released a statement following a review of an edited video of Joe Biden

- The board upheld Meta's decision to allow the video to stay online, as it did not violate the existing policy

- However, the board urged Meta to make changes to its current Manipulated Media policy

Facebook parent company Meta faced criticism for its "incoherent and confusing" policies on manipulated media, with calls for reform ahead of elections in 50 countries involving over 2 billion voters in 2024.

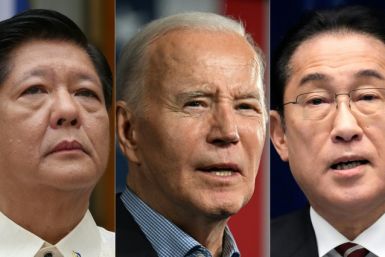

The Meta Oversight Board, which runs independently of Meta and is funded through a grant from the company, said in a Monday statement that Meta's Manipulated Media policy needs to be reconsidered. The recommendation came after the board reviewed an edited video of President Joe Biden that was uploaded in May last year.

The video makes it appear as though Biden is inappropriately touching his adult granddaughter's chest, and it is accompanied by a caption describing him as a "pedophile."

The original version of the video shows the president placing an "I Voted" sticker on his granddaughter's shirt after she indicated where to place it. He then kisses her on the cheek.

Following a review, the board upheld Meta's recent decision to allow the edited video of Biden to stay online as it does not violate the existing Manipulated Media policy.

"Since the video in this post was not altered using AI and it shows President Biden doing something he did not do (not something he didn't say), it does not violate the existing policy," the board said in the Monday statement.

While the board upheld the decision not to take down the video, it argued that Meta's Manipulated Media policy "is lacking in persuasive justification, is incoherent and confusing to users, and fails to specify the harms it is seeking to prevent."

"In short, the policy should be reconsidered," the Oversight Board added.

The board said the policy currently applies only to video content that has been altered or generated by artificial intelligence (AI) and that makes people appear to say words they did not say. This scope is "too narrow," the statement said, and must be extended to cover audio and content that shows people doing things they did not do.

The board also recommended that Meta make changes to the policy quickly, because 50 countries across the world will be holding elections this year.

Meta acknowledged the review and said it would implement the board's decision after deliberation.

© Copyright IBTimes 2024. All rights reserved.