Artificial Intelligence: The Weapon Of The Next Cold War?

It is easy to confuse the current geopolitical situation with that of the 1980s. The United States and Russia each accuse the other of interfering in domestic affairs. Russia has annexed territory over U.S. objections, raising concerns about military conflict.

As during the Cold War that followed World War II, nations are developing and building weapons based on advanced technology. During the Cold War, the weapon of choice was the nuclear missile; today it’s software, whether used to attack computer systems or targets in the real world.

Russian rhetoric about the importance of artificial intelligence is picking up – and with good reason: As artificial intelligence software develops, it will be able to make decisions based on more data, and more quickly, than humans can handle. As someone who researches the use of AI for applications as diverse as drones, self-driving vehicles and cybersecurity, I worry that the world may be entering – or perhaps already in – another cold war, fueled by AI. And I’m not alone.

Modern Cold War

As in the Cold War of the 1940s and 1950s, each side has reason to fear its opponent gaining a technological upper hand. In a recent meeting at the Strategic Missile Academy near Moscow, Russian President Vladimir Putin suggested that AI may be the way Russia can rebalance the power shift created by the U.S. outspending Russia nearly 10-to-1 on defense each year. Russia’s state-sponsored RT media reported AI was “key to Russia beating [the] U.S. in defense.”

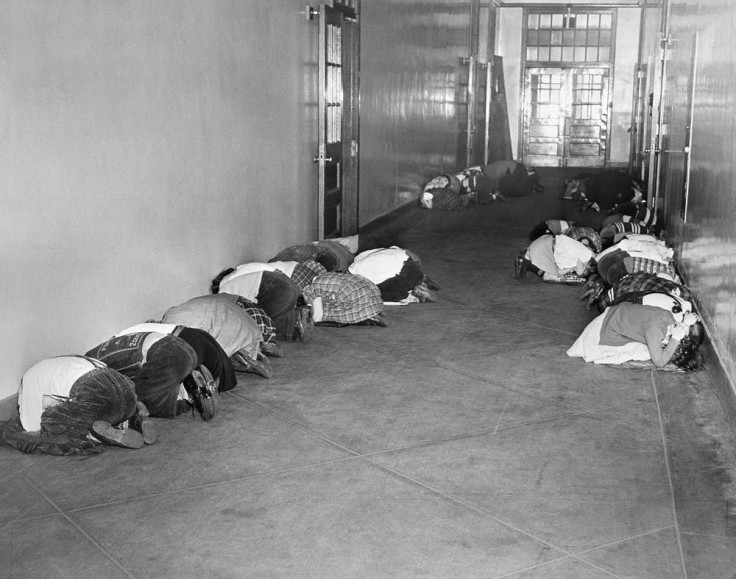

It sounds remarkably like the rhetoric of the Cold War, when the United States and the Soviet Union each built up enough nuclear weapons to kill everyone on Earth many times over. This arms race led to the concept of mutual assured destruction: Neither side could risk engaging in open war without risking its own ruin. Instead, both sides stockpiled weapons and dueled indirectly via smaller armed conflicts and political disputes.

Now, more than 25 years after the end of the Cold War, the U.S. and Russia have decommissioned tens of thousands of nuclear weapons. However, tensions are growing. Any modern-day cold war would include cyberattacks and nuclear powers’ involvement in allies’ conflicts. It’s already happening.

Both countries have expelled the other’s diplomats. Russia has annexed Crimea from Ukraine. The Turkey-Syria border war has even been called a “proxy war” between the U.S. and Russia.

Both countries – and many others too – still have nuclear weapons, but their use by a major power remains unthinkable to most. However, recent reports show growing public concern that countries might use them.

A World Of Cyberconflict

Cyberweapons, however, particularly those powered by AI, are still considered fair game by both sides.

Russia and Russian-supporting hackers have conducted electronic espionage and launched cyberattacks against power plants, banks, hospitals and transportation systems – and against U.S. elections. Russian cyberattackers have targeted Ukraine and U.S. allies Britain and Germany.

The U.S. is certainly capable of responding and may have done so.

Putin has said he views artificial intelligence as “the future, not only for Russia, but for all humankind.” In September 2017, he told students that the nation that “becomes the leader in this sphere will become the ruler of the world.” Putin isn’t saying he’ll hand over the nuclear launch codes to a computer, though science fiction has portrayed computers launching missiles. He is talking about many other uses for AI.

Use Of AI For Nuclear Weapons Control

Threats posed by surprise attacks from ship- and submarine-based nuclear weapons and weapons placed near a country’s borders may lead some nations to entrust self-defense tactics – including launching counterattacks – to the rapid decision-making capabilities of an AI system.

In case of an attack, the AI could act more quickly and without the potential hesitation or dissent of a human operator.

A fast, automated response capability could help ensure potential adversaries know a nation is ready and willing to launch, the key to mutual assured destruction’s effectiveness as a deterrent.

AI Control Of Non-Nuclear Weapons

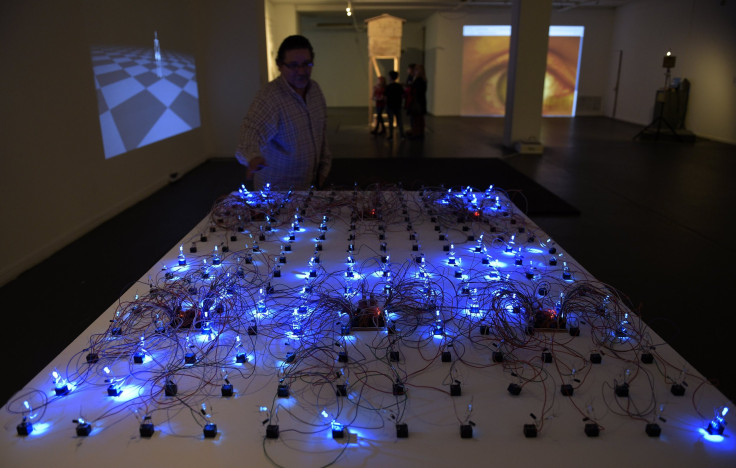

AI can also be used to control non-nuclear weapons including unmanned vehicles like drones and cyberweapons. Unmanned vehicles must be able to operate while their communications are impaired – which requires onboard AI control. AI control also prevents a group that’s being targeted from stopping or preventing a drone attack by destroying its control facility, because control is distributed, both physically and electronically.

Cyberweapons may, similarly, need to operate beyond the range of communications. And defending against them may require responses so rapid that they would be best launched and controlled by AI systems.

AI-coordinated attacks can launch cyber or real-world weapons almost instantly, making the decision to attack before a human even notices a reason to. AI systems can change targets and techniques faster than humans can comprehend, much less analyze. For instance, an AI system might launch a drone to attack a factory, observe drones responding to defend, and launch a cyberattack on those drones, with no noticeable pause.

The Importance Of AI Development

A country that thinks its adversaries have or will get AI weapons will want to get them too. Wide use of AI-powered cyberattacks may still be some time away.

Countries might agree to a proposed Digital Geneva Convention to limit AI conflict. But that won’t stop AI attacks by independent nationalist groups, militias, criminal organizations, terrorists and others – and countries can back out of treaties. It’s almost certain, therefore, that someone will turn AI into a weapon – and that everyone else will do so too, even if only out of a desire to be prepared to defend themselves.

With Russia embracing AI, nations that don’t, or that restrict AI development, risk being unable to compete economically or militarily with countries wielding advanced AIs. Advanced AIs can create advantage for a nation’s businesses, not just its military, and those without AI may be severely disadvantaged. Perhaps most importantly, though, having sophisticated AIs in many countries could provide a deterrent against attacks, as happened with nuclear weapons during the Cold War.

This article was originally published in The Conversation.

Jeremy Straub is an Assistant Professor of Computer Science at North Dakota State University.
