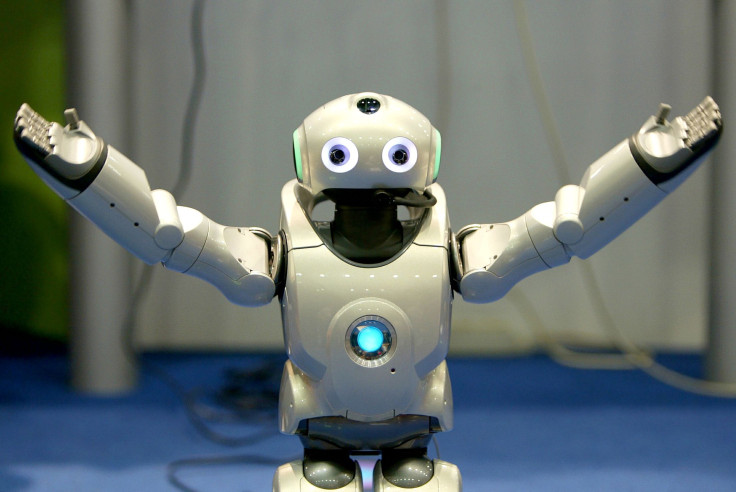

Artificial Intelligence: EU To Debate Robots’ Legal Rights After Committee Calls For Mandatory AI ‘Kill Switches’

In his seminal book “Superintelligence,” the Swedish philosopher Nick Bostrom highlights the need to draft rules that keep machines under human control well before an inevitable “intelligence explosion” occurs. European lawmakers, it seems, are listening.

On Thursday, the European Parliament’s legal affairs committee approved a wide-ranging report that outlines a possible framework for how humans would interact with AI and robots. Among other things, the report weighs creating a specific legal status for robots, one that would designate them as “electronic persons,” and requiring designers to build a “kill switch” into their machines.

The report also calls for the creation of a European agency for robotics and AI that could respond to the opportunities and challenges arising from advances in robotics.

“Now that humankind stands on the threshold of an era when ever more sophisticated robots, bots, androids and other manifestations of artificial intelligence seem poised to unleash a new industrial revolution, which is likely to leave no stratum of society untouched, it is vitally important for the legislature to consider all its implications,” the report states. “The ‘soft impacts’ on human dignity may be difficult to estimate, but will still need to be considered if and when robots replace human care and companionship, and ... questions of human dignity also can arise in the context of ‘repairing’ or enhancing human beings.”

The idea of fitting robots with a kill switch is not new. Last June, scientists at Google’s artificial intelligence division DeepMind also announced they were developing a similar kill switch to ensure that intelligent machines do not go all Terminator on us.

“Now and then it may be necessary for a human operator to press the big red button to prevent the agent from continuing a harmful sequence of actions,” the researchers said at the time.

Of course, in a real-life situation, the “control problem,” as Bostrom calls it in his book, may prove far harder to solve. If a machine does become superintelligent, Bostrom says, it could easily find a way to outwit its human operators, disabling any kill switches in the process.

To prevent this dystopian outcome, which still lies firmly in the realm of science fiction, the authors of the report turn to rules drafted by Isaac Asimov, one of the grand masters of sci-fi. The report suggests using Asimov’s three laws of robotics as a general principle for designers, producers and operators of robots. The foremost of these laws states that “a robot may not injure a human being or, through inaction, allow a human being to come to harm.”

The report’s proposals will now be debated by the full European Parliament next month, where they would need the backing of an absolute majority of members. If adopted, they would go to the European Commission, which would decide whether to propose legislation.

© Copyright IBTimes 2025. All rights reserved.