Google's AI Tricked By Students Into Thinking 3D-Printed Turtle Is A Rifle

Artificial intelligence (AI) has found its way into almost every aspect of our lives. We are slowly moving towards a society that works hand in hand with machines capable of making simple decisions. From self-driving cars to robot vacuum cleaners, we are steadily tying our existence to machines.

But AI systems are designed by humans, who will always have the upper hand. No flawless AI has been built yet, and hacking enthusiasts constantly get the better of programs specifically designed to keep them out. Now a startling demonstration has shown just how easily an AI's rigid decision-making can be exploited.

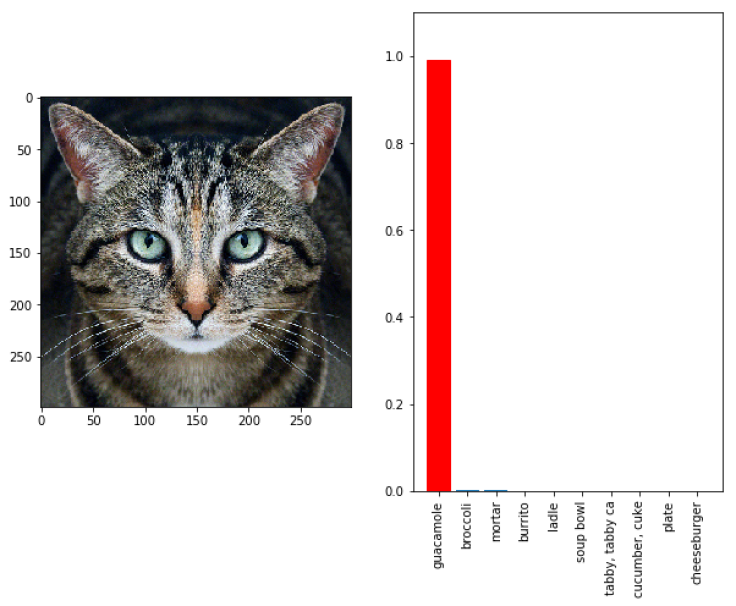

A group of students from the Massachusetts Institute of Technology (MIT), calling themselves labsix, published a paper on arXiv, along with a press release titled "Fooling Neural Networks in the Physical World with 3D Adversarial Objects." In it, they say they successfully tricked Inception V3, an image classifier AI that Google released for researchers to tinker with.

They 3D-printed an adversarial object (one designed to trick machine vision software and AI): a turtle that Google's AI identifies as a rifle from almost every angle. Yes, a rifle.

According to a report by the Verge, "in the AI world, these are pictures engineered to trick machine vision software, incorporating special patterns that make AI systems flip out. Think of them as optical illusions for computers. You can make adversarial glasses that trick facial recognition systems into thinking you’re someone else, or can apply an adversarial pattern to a picture as a layer of near-invisible static. Humans won’t spot the difference, but to an AI it means that a panda has suddenly turned into a pickup truck."
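The "near-invisible static" idea can be illustrated with a toy example. The sketch below is an assumption-laden illustration, not labsix's code or Google's model: a hypothetical linear scorer stands in for Inception V3, and the fast gradient sign method (FGSM, a well-known adversarial technique swapped in here purely for illustration) nudges every "pixel" by at most `epsilon`. The names `score`, `classify` and `fgsm_perturb` are hypothetical. Each change is tiny, but because they all push the score the same way, they add up across many pixels and flip the label.

```python
# Toy sketch only: a hypothetical linear "classifier" stands in for Inception V3,
# and the fast gradient sign method (FGSM) stands in for a real attack.

def score(weights: list[float], pixels: list[float]) -> float:
    """Linear score: positive means "panda", otherwise "pickup truck"."""
    return sum(w * p for w, p in zip(weights, pixels))


def classify(weights: list[float], pixels: list[float]) -> str:
    return "panda" if score(weights, pixels) > 0 else "pickup truck"


def fgsm_perturb(weights: list[float], pixels: list[float], epsilon: float) -> list[float]:
    """Move every pixel by at most epsilon in the direction that lowers the score.

    For a linear score the gradient with respect to the pixels is the weight
    vector itself, so the FGSM step is -epsilon * sign(weight)."""
    perturbed = []
    for w, p in zip(weights, pixels):
        sign = (w > 0) - (w < 0)  # +1, 0 or -1
        perturbed.append(min(1.0, max(0.0, p - epsilon * sign)))  # keep pixels in [0, 1]
    return perturbed


# A hypothetical 1,000-"pixel" image whose score is only slightly positive.
n = 1000
weights = [1.0 if i % 2 == 0 else -1.0 for i in range(n)]
image = [0.52 if i % 2 == 0 else 0.50 for i in range(n)]

adversarial = fgsm_perturb(weights, image, epsilon=0.03)
print(classify(weights, image))        # panda
print(classify(weights, adversarial))  # pickup truck
print(max(abs(a - b) for a, b in zip(adversarial, image)))  # largest per-pixel change, about 0.03
```

In this toy case a per-pixel change of 0.03 across 1,000 pixels shifts the score by about 30, which is far more than the original margin of about 10. That accumulation over many dimensions is one common intuition for why high-resolution images are so exposed.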

Some groups are working to counter these attacks and make AI more robust. What sets this attack apart is how well it holds up: most adversarial images work only from a particular angle, and when the camera zooms in or moves, the software often catches on. This attack managed to confound the AI at almost every angle and distance.

"In concrete terms, this means it's likely possible that one could construct a yard sale sign which to human drivers appears entirely ordinary, but might appear to a self-driving car as a pedestrian which suddenly appears next to the street," labsix were quoted in the Verge article as saying. "Adversarial examples are a practical concern that people must consider as neural networks become increasingly prevalent (and dangerous)," they added.

According to the study, the team's new method, "Expectation Over Transformation," produced a turtle that is classified as a rifle, a baseball that reads as an espresso, and numerous non-3D-printed examples. The target classes were chosen at random. The objects work from most, but not all, angles.
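The core idea of Expectation Over Transformation can be sketched in the same toy spirit. Rather than optimising the perturbation for one fixed view, EOT optimises the target-class score averaged over a distribution of transformations, such as changes of angle and distance for a physical object. In the minimal sketch below, which is not the labsix implementation, a hypothetical linear scorer stands in for the neural network, cyclic shifts and brightness factors stand in for viewpoints, and a single signed-gradient step stands in for the full iterative optimisation. All names (`TRANSFORMS`, `view`, `expected_score`, `expected_gradient`, `signed_step`) are hypothetical. It enumerates a small fixed set of transformations; a practical implementation would typically sample them at random instead.

```python
# Toy sketch of Expectation Over Transformation (EOT). Assumptions, not from the
# labsix paper: a linear scorer replaces the neural network, cyclic shifts and
# brightness factors replace changes of viewpoint, and one signed-gradient step
# replaces the full iterative optimisation.

# Hypothetical "viewpoints": (cyclic shift, brightness factor) pairs.
TRANSFORMS = [(t, b) for t in range(3) for b in (0.8, 1.0, 1.2)]


def view(x: list[float], t: int, b: float) -> list[float]:
    """Render x from one viewpoint: output[i] = b * x[(i + t) % n]."""
    n = len(x)
    return [b * x[(i + t) % n] for i in range(n)]


def target_score(w: list[float], x: list[float]) -> float:
    """Score for the attacker's target class (e.g. "rifle"); higher is better."""
    return sum(wi * xi for wi, xi in zip(w, x))


def expected_score(w: list[float], x: list[float]) -> float:
    """The EOT objective: target score averaged over all viewpoints."""
    return sum(target_score(w, view(x, t, b)) for t, b in TRANSFORMS) / len(TRANSFORMS)


def expected_gradient(w: list[float], n: int) -> list[float]:
    """Gradient of expected_score with respect to x.

    d/dx[j] of w . view(x, t, b) is b * w[(j - t) % n]; EOT averages this
    over the viewpoints instead of using a single one."""
    grad = [0.0] * n
    for t, b in TRANSFORMS:
        for j in range(n):
            grad[j] += b * w[(j - t) % n] / len(TRANSFORMS)
    return grad


def signed_step(x: list[float], grad: list[float], epsilon: float) -> list[float]:
    """Move each pixel by epsilon in the direction that raises the score, clipped to [0, 1]."""
    return [min(1.0, max(0.0, xi + epsilon * ((g > 0) - (g < 0)))) for xi, g in zip(x, grad)]


w = [1.0, -0.7, 0.3, 0.6, -0.5, -0.2]  # hypothetical weights
x0 = [0.5] * len(w)                    # the unmodified object
epsilon = 0.05

single_view = signed_step(x0, w, epsilon)  # optimised for the identity view only
eot = signed_step(x0, expected_gradient(w, len(w)), epsilon)

# Averaged over all viewpoints, the EOT perturbation scores higher.
print(expected_score(w, single_view), expected_score(w, eot))
```

Because the toy scorer is linear and no clipping occurs here, the EOT step is guaranteed to reach a higher average score than the single-view step under the same per-pixel budget. With a real neural network the optimisation is iterative and carries no such guarantee, which is consistent with the objects fooling the classifier from most, but not all, angles.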

According to the Verge report, "labsix needed access to Google’s vision algorithm in order to identify its weaknesses and fool it. This is a significant barrier for anyone who would try and use these methods against commercial vision systems deployed by, say, self-driving car companies. However, other adversarial attacks have been shown to work against AI sight-unseen." The labsix team plans to tackle this problem next.

© Copyright IBTimes 2024. All rights reserved.