Supernova 'Kill Zone' May Not Be As Big As Previously Believed

How far from Earth would a supernova have to occur to trigger a mass extinction? In 2016, a team of researchers took up the question and estimated that a supernova’s “kill zone” would extend roughly 30 light-years. They said that a supernova beyond that distance would not cause widespread damage on Earth, but could still seed the planet with the elements that life depends on.


Now, in a new study to be published in the Astrophysical Journal, a team of astrophysicists has revised the kill zone estimate for supernovae upward. They also argue that the estimated distance to a supernova thought to have occurred roughly 2.6 million years ago (which may have blasted Earth’s surface with enough radioactive debris to influence its climate) should be cut in half.

“People estimated the ‘kill zone’ for a supernova in a paper in 2003, and they came up with about 25 light-years from Earth. Now we think maybe it’s a bit greater than that,” study lead author Adrian Melott, a professor of physics and astronomy at the University of Kansas, said in a statement. “We don’t know precisely, and of course it wouldn’t be a hard-cutoff distance. It would be a gradual change. But we think something more like 40 or 50 light-years. So, an event at 150 light-years should have some effects here but not set off a mass extinction.”

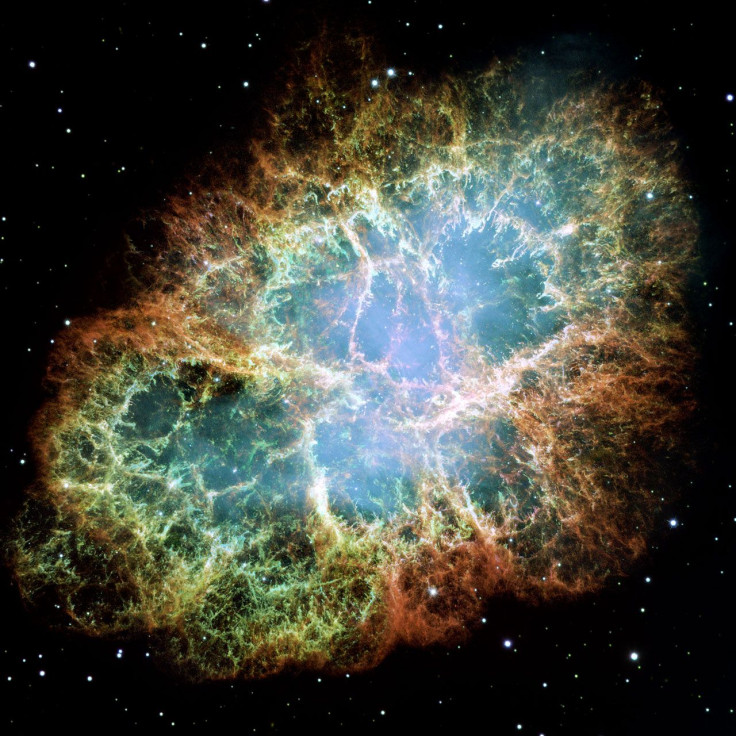

When a star goes supernova, it seeds the universe with elements such as carbon, nitrogen, oxygen and iron, the “star stuff” that is the basic component of all life as we know it. One of the radioactive isotopes ejected when a star explodes as a supernova is iron-60, which has a half-life of 2.6 million years.

In 1999, researchers discovered significant levels of iron-60 in Earth’s deep-sea crusts, indicating that sometime in our planet’s tumultuous past, it had been sprayed with the radioactive material. Then, last year, after analyzing crust samples collected from the Pacific, Atlantic and Indian oceans, scientists estimated that our planet had, sometime between 2.6 million and 1.5 million years ago, been buffeted by supernova shock waves that left their mark not only on Earth’s surface but also on its atmosphere.

“The cosmic rays from the supernova would be getting down into the lower atmosphere,” Melott said. “All kinds of elementary particles are penetrating from altitudes of 45-10 miles, and many muons get to the ground. The effect of the muons is greater — it’s not overwhelming, but imagine every organism on Earth gets the equivalent of several CT scans per year. CT scans have some danger associated with them. Your doctor wouldn’t recommend a CT scan unless you really needed it.”

So, while this would definitely not have triggered a mass extinction event, it would have had a “fairly bad” impact on organisms, with cancer and mutation among the most obvious consequences. Such supernovae may even have played a role in human evolution: atmospheric ionization from the explosions would have sharply increased cloud-to-ground lightning, which, in turn, would have destroyed the habitats of larger mammals.

“Lightning is the number one cause of wildfires other than humans. So, we’d expect a whole lot more wildfires, and that could change the ecology of different regions, such as a loss of tree cover in northeast Africa, which could even have something to do with human evolution,” Melott said. “The Great Plains has recently been largely kept grass-covered by a bunch of wildfires. A big increase in lightning would also mean a big increase in nitrate coming out of the rain, and that would act like fertilizer.”

© Copyright IBTimes 2025. All rights reserved.