Artificial Intelligence-Enhanced Journalism Offers A Glimpse Of The Future Of The Knowledge Economy

Much as robots have transformed entire swaths of the manufacturing economy, artificial intelligence and automation are now changing information work, letting humans offload cognitive labor to computers. In journalism, for instance, data mining systems alert reporters to potential news stories, while newsbots offer new ways for audiences to explore information. Automated writing systems generate financial, sports and elections coverage.

A common question as these intelligent technologies infiltrate various industries is how work and labor will be affected. In this case, who – or what – will do journalism in this AI-enhanced and automated world, and how will they do it?

The evidence I’ve assembled in my new book “Automating the News: How Algorithms are Rewriting the Media” suggests that the future of AI-enabled journalism will still have plenty of people around. However, the jobs, roles and tasks of those people will evolve and look a bit different. Human work will be hybridized – blended together with algorithms – to suit AI’s capabilities and accommodate its limitations.

Augmenting, not substituting

Some estimates suggest that current levels of AI technology could automate only about 15% of a reporter’s job and 9% of an editor’s job. Humans still have an edge over non-Hollywood AI in several key areas that are essential to journalism, including complex communication, expert thinking, adaptability and creativity.

Reporting, listening, responding and pushing back, negotiating with sources, and then having the creativity to put it together – AI can do none of these indispensable journalistic tasks. It can often augment human work, though, to help people work faster or with improved quality. And it can create new opportunities for deepening news coverage and making it more personalized for an individual reader or viewer.

Newsroom work has always adapted to waves of new technology, including photography, telephones, computers – or even just the copy machine. Journalists will adapt to work with AI, too. As a technology, it is already changing newswork and will continue to do so, often complementing but rarely substituting for a trained journalist.

New work

I’ve found that more often than not, AI technologies appear to actually be creating new types of work in journalism.

Take for instance the Associated Press, which in 2017 introduced the use of computer vision AI techniques to label the thousands of news photos it handles every day. The system can tag photos with information about what or who is in an image, its photographic style, and whether an image is depicting graphic violence.

The system gives photo editors more time to think about what they should publish and frees them from spending lots of time just labeling what they have. But developing it took a ton of work, both editorial and technical: Editors had to figure out what to tag and whether the algorithms were up to the task, then develop new test data sets to evaluate performance. When all that was done, they still had to supervise the system, manually approving the suggested tags for each image to ensure high accuracy.
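To make “develop new test data sets to evaluate performance” a little more concrete, here is a minimal Python sketch of one common way to score a tagger: compare its suggested tags against tags that editors assigned by hand, and compute precision and recall for each tag. This is not the AP’s system; the photo IDs, tag names and the `evaluate_tags` function are hypothetical.

```python
from collections import defaultdict

def evaluate_tags(suggested, gold):
    """Per-tag precision and recall of suggested tags against hand-labeled tags.

    suggested, gold: dicts mapping a photo ID to a set of tags.
    Only photos in the hand-labeled test set (gold) are scored.
    """
    tp, fp, fn = defaultdict(int), defaultdict(int), defaultdict(int)
    for photo_id, gold_tags in gold.items():
        predicted = suggested.get(photo_id, set())
        for tag in predicted & gold_tags:
            tp[tag] += 1  # suggested and confirmed by editors
        for tag in predicted - gold_tags:
            fp[tag] += 1  # suggested but wrong
        for tag in gold_tags - predicted:
            fn[tag] += 1  # missed by the system
    scores = {}
    for tag in set(tp) | set(fp) | set(fn):
        n_pred, n_true = tp[tag] + fp[tag], tp[tag] + fn[tag]
        # Convention used here: report 0.0 when a ratio is undefined.
        precision = tp[tag] / n_pred if n_pred else 0.0
        recall = tp[tag] / n_true if n_true else 0.0
        scores[tag] = (precision, recall)
    return scores

# Hypothetical test set: tags editors assigned by hand vs. tags the system suggested.
gold = {"p1": {"protest", "crowd"}, "p2": {"soccer"}, "p3": {"graphic_violence"}}
suggested = {"p1": {"protest"}, "p2": {"soccer", "crowd"}, "p3": set()}

scores = evaluate_tags(suggested, gold)
for tag, (p, r) in sorted(scores.items()):
    print(f"{tag}: precision={p:.2f} recall={r:.2f}")
```

Scoring per tag, rather than overall, matters because errors on a tag like graphic violence cost far more than errors on a tag like soccer. In this toy data the system misses the one violent photo entirely, which is the kind of result that would argue for keeping an editor approving every suggested tag.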

Stuart Myles, the AP executive who oversees the project, told me it took about 36 person-months of work, spread over a couple of years and more than a dozen editorial, technical and administrative staff. About a third of the work, he told me, involved journalistic expertise and judgment that is especially hard to automate. While some of the human supervision may be reduced in the future, he thinks that people will still need to do ongoing editorial work as the system evolves and expands.

Semi-automated content production

In the United Kingdom, the RADAR project semi-automatically pumps out around 8,000 localized news articles per month. The system relies on a stable of six journalists who find government data sets tabulated by geographic area, identify interesting and newsworthy angles, and then develop those ideas into data-driven templates. The templates encode how to automatically tailor bits of the text to the geographic locations identified in the data. For instance, a story could talk about aging populations across Britain and show readers in Luton how their own community is changing, while readers in Bristol would see statistics localized for Bristol. The stories then go out by wire service to local media who choose which to publish.
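The template-plus-data idea can be sketched in a few lines of Python. The areas, figures and `render_story` function below are invented for illustration and are not RADAR’s data or code; the point is that one piece of journalist-written logic yields a different, locally accurate sentence for every row of a table.

```python
# Hypothetical, made-up figures for two fictional areas (not real statistics):
# share of residents aged 65 and over, in percent.
DATA = {
    "Exampleton": {"over65_2008": 14.0, "over65_2018": 17.5},
    "Sampleford": {"over65_2008": 18.0, "over65_2018": 17.0},
}

def render_story(area, row):
    """Fill one national story template with one area's statistics."""
    before, after = row["over65_2008"], row["over65_2018"]
    change = after - before
    # The journalist decides how each possible direction of change is worded.
    if change > 0:
        trend = f"risen by {change:.1f} percentage points"
    elif change < 0:
        trend = f"fallen by {abs(change):.1f} percentage points"
    else:
        trend = "stayed the same"
    return (
        f"Britain's population is aging. In {area}, the share of residents "
        f"aged 65 and over has {trend}, from {before:.1f}% in 2008 "
        f"to {after:.1f}% in 2018."
    )

# One localized version of the story per area in the data set.
stories = {area: render_story(area, row) for area, row in DATA.items()}
for text in stories.values():
    print(text)
```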

The approach marries journalists and automation into an effective and productive process. The journalists use their expertise and communication skills to lay out options for storylines the data might follow. They also talk to sources to gather national context, and write the template. The automation then acts as a production assistant, adapting the text for different locations.

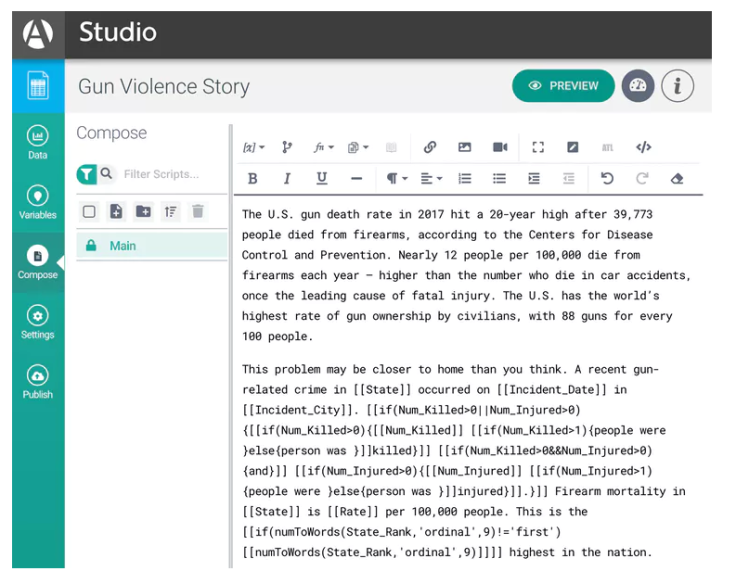

RADAR journalists use a tool called Arria Studio, which offers a glimpse of what writing automated content looks like in practice. It’s really just a more complex interface for word processing. The author writes fragments of text controlled by data-driven if-then-else rules. For instance, in an earthquake report you might want a different adjective to talk about a quake that is magnitude 8 than one that is magnitude 3. So you’d have a rule like, IF magnitude > 7 THEN text = “strong earthquake,” ELSE IF magnitude < 4 THEN text = “minor earthquake.” Tools like Arria also contain linguistic functionality to automatically conjugate verbs or decline nouns, making it easier to work with bits of text that need to change based on data.
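Here is the earthquake rule written out as a small Python sketch, alongside a toy stand-in for the kind of grammatical agreement a tool like Arria handles automatically. It does not use Arria’s actual rule language or API. The wording for magnitudes between 4 and 7 is an assumption, since the rule above leaves that range unspecified, and the place names are fictional.

```python
def quake_adjective(magnitude: float) -> str:
    """Data-driven if-then-else rule for choosing descriptive wording."""
    if magnitude > 7:
        return "strong earthquake"
    elif magnitude < 4:
        return "minor earthquake"
    # Assumption: the rule in the text does not cover 4 to 7;
    # this fallback wording is hypothetical.
    return "moderate earthquake"

def there_be(count: int, singular: str, plural: str) -> str:
    """Toy agreement helper: pick verb and noun forms that match the count."""
    if count == 1:
        return f"there was 1 {singular}"
    return f"there were {count} {plural}"

def quake_report(place: str, magnitude: float, aftershocks: int) -> str:
    """Assemble one sentence from rule-selected and agreement-adjusted fragments."""
    return (
        f"A {quake_adjective(magnitude)} of magnitude {magnitude} struck {place}; "
        f"{there_be(aftershocks, 'aftershock', 'aftershocks')}."
    )

print(quake_report("Exampleton", 8.1, 3))
print(quake_report("Sampleford", 3.2, 1))
```

The writer’s job shifts from producing one sentence to specifying every branch the data could take, which is the new way of thinking about writing described in the next paragraph.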

Authoring interfaces like Arria allow people to do what they’re good at: logically structuring compelling storylines and crafting creative, nonrepetitive text. But they also require some new ways of thinking about writing. For instance, template writers need to approach a story with an understanding of what the available data could say – to imagine how the data could give rise to different angles and stories, and delineate the logic to drive those variations.

Supervising and managing automated content systems, or what journalists might simply call “editing” them, is also occupying more and more people in the newsroom. Maintaining quality and accuracy is of the utmost concern in journalism.

RADAR has developed a three-stage quality assurance process. First, a journalist will read a sample of all of the articles produced. Then another journalist traces claims in the story back to their original data source. As a third check, an editor will go through the logic of the template to try to spot any errors or omissions. It’s almost like the work a team of software engineers might do in debugging a script – and it’s all work humans must do, to ensure the automation is doing its job accurately.
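RADAR’s checks are done by people; the Python sketch below only illustrates how a script might support the first two stages. It is hypothetical and not RADAR’s tooling: `sample_for_review` draws a random sample of articles for a journalist to read, and `untraced_numbers` flags figures in an article that do not match any value in the data row it was generated from. The article texts and figures are invented.

```python
import random
import re

def sample_for_review(articles, k, seed=0):
    """Stage 1 (sketch): pick a random sample of generated articles to read."""
    rng = random.Random(seed)
    return rng.sample(articles, min(k, len(articles)))

def untraced_numbers(text, source_row):
    """Stage 2 (sketch): list numbers in the text that match no numeric source value.

    A crude check: numbers are compared after formatting to one decimal place.
    """
    source_values = {
        f"{float(v):.1f}" for v in source_row.values() if isinstance(v, (int, float))
    }
    found = re.findall(r"\d+(?:\.\d+)?", text)
    return [n for n in found if f"{float(n):.1f}" not in source_values]

# Invented example articles; the second contains a transposed figure (71.0 vs 17.0).
articles = [
    {"id": 1, "text": "In Exampleton, the share rose from 14.0% in 2008 to 17.5% in 2018.",
     "source": {"over65_2008": 14.0, "over65_2018": 17.5, "start": 2008, "end": 2018}},
    {"id": 2, "text": "In Sampleford, the share fell from 18.0% in 2008 to 71.0% in 2018.",
     "source": {"over65_2008": 18.0, "over65_2018": 17.0, "start": 2008, "end": 2018}},
]

for art in sample_for_review(articles, k=5):
    problems = untraced_numbers(art["text"], art["source"])
    if problems:
        print(f"Article {art['id']}: check {problems} against the source data")
# Stage 3, an editor reading through the template logic, stays a human task.
```

A flagged number is not necessarily wrong: a derived figure such as a difference between two years would also be flagged. The output is a to-do list for a human tracer, not a verdict.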

Developing human resources

Initiatives like those at the Associated Press and at RADAR demonstrate that AI and automation are far from destroying jobs in journalism. They’re creating new work – as well as changing existing jobs. The journalists of tomorrow will need to be trained to design, update, tweak, validate, correct, supervise and generally maintain these systems. Many may need skills for working with data and formal logical thinking to act on that data. Fluency with the basics of computer programming wouldn’t hurt either.

As these new jobs evolve, it will be important to ensure they’re good jobs – that people don’t just become cogs in a much larger machine process. Managers and designers of this new hybrid labor will need to consider the human concerns of autonomy, effectiveness and usability. But I’m optimistic that focusing on the human experience in these systems will allow journalists to flourish, and society to reap the rewards of speed, breadth of coverage and increased quality that AI and automation can offer.

Nicholas Diakopoulos is an assistant professor of Communication Studies, Northwestern University.

This article originally appeared in The Conversation.